Jan 20, 2026

Table of contents

When an autonomous agent makes a mistake, how do you audit the neural network?

Crisis of Cognitive Continuity: Challenges in the Traditional Enterprise Architecture

The Context Graph: An AI "Flight Recorder" - Addressing challenges effectively

The MoolAI Gateway Advantage: Turning Exhaust into Intelligence

Security Is Not Just a Filter; It’s Provenance

Conclusion

The enterprise AI conversation is shifting. We are moving past the era of "Chat with your PDF" and entering the era of Agentic AI systems that not only summarize text but also execute workflows, approve transactions, and make decisions.

But this shift brings a terrifying question for CISOs and Compliance Officers:

When an autonomous agent makes a mistake, how do you audit the neural network?

You cannot subpoena a weight matrix. You cannot ask a floating-point number why it approved a discount that violated policy. To deploy agents safely, we need to move from "Black Box" magic to "Glass Box" transparency. We need a new architectural primitive: the Context Graph.

This article explains why reasoning, path retrieval, and PII masking are missing from traditional LLM deployments, and how context graphs address these gaps. You will also learn how MoolAI delivers an accountability-driven enterprise AI solution that is safe, reliable, and governance-ready.

Crisis of Cognitive Continuity: Challenges in the Traditional Enterprise Architecture

Traditional enterprise architecture relies on Systems of Record (like Salesforce or SAP). These are excellent at telling you the current state of the world (e.g., "Deal Closed"). But they have a critical flaw: they are amnesiacs regarding the process. They discard the "decision trace"—the inputs, the specific policy version checked, the exceptions granted, and the reasoning used to reach that state.

When you connect a standard LLM to a System of Record, you get an agent that sees the what but is blind to the why. It sees that a discount was given, but not that it was a one-time exception for a strategic partner. This "context blindness" leads to agents that confidently repeat past mistakes or hallucinate precedents that don't exist.

Traditional LLM deployments cannot track the history of past decisions or the precise reasoning behind them. To solve this, we advocate for a more advanced system: the Context Graph.

Unlike a static Knowledge Graph that maps relationships (CEO -> Company), a Context Graph is dynamic. It functions as an immutable "Flight Recorder" for your AI agents. It captures the Decision Trace of every interaction:

The Inputs: What data did the agent see at that exact millisecond?

The Logic: Which specific policy or guardrail was active?

The Reasoning: Why did the model choose Path A over Path B?

The Outcome: What action was taken?
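The four parts of a decision trace map naturally onto a record type. The sketch below is a minimal illustration under stated assumptions, not MoolAI's actual schema: the names `DecisionTrace` and `TraceLog`, the field names, and the use of a SHA-256 hash chain to make the "flight recorder" tamper-evident are all hypothetical choices made for this example.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class DecisionTrace:
    """Hypothetical decision-trace record: inputs, logic, reasoning, outcome."""

    inputs: dict         # The Inputs: data the agent saw at decision time
    policy_version: str  # The Logic: policy or guardrail active at that moment
    reasoning: str       # The Reasoning: why this path was chosen
    outcome: str         # The Outcome: the action actually taken
    prev_hash: str = ""  # digest of the previous record ("" for the first one)

    def digest(self) -> str:
        # Canonical JSON (sorted keys) so identical records always hash identically
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class TraceLog:
    """Hypothetical append-only, hash-chained log of decision traces."""

    def __init__(self) -> None:
        self._records: List[DecisionTrace] = []
        self._head = ""  # digest of the newest record, captured at append time

    def append(self, inputs: dict, policy_version: str,
               reasoning: str, outcome: str) -> DecisionTrace:
        # Copy inputs so later changes to the caller's dict don't alter history
        record = DecisionTrace(dict(inputs), policy_version, reasoning,
                               outcome, prev_hash=self._head)
        self._records.append(record)
        self._head = record.digest()
        return record

    def verify(self) -> bool:
        # Each record must point at its predecessor's current digest,
        # and the newest record must still match the stored head.
        expected_prev = ""
        for record in self._records:
            if record.prev_hash != expected_prev:
                return False
            expected_prev = record.digest()
        return expected_prev == self._head


log = TraceLog()
log.append({"customer": "acme", "discount_pct": 25}, "pricing-policy-v3",
           "One-time exception for a strategic partner", "approve")
log.append({"customer": "globex", "discount_pct": 30}, "pricing-policy-v3",
           "Exceeds the 20% cap; no exception on file", "deny")
print(log.verify())  # True

# Editing history is detectable: the next record's prev_hash no longer matches
log._records[0].inputs["discount_pct"] = 10
print(log.verify())  # False
```

A hash chain makes the record tamper-evident rather than strictly immutable: anyone able to rewrite every record and the stored head could rebuild the chain, so in practice the head digest would need to be anchored somewhere the writer cannot modify.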

This transforms AI from a liability into a legally defensible asset. If an agent denies a loan or exposes sensitive data, you don't have to guess what happened. You can traverse the graph and replay the decision.
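"Traversing the graph" can be illustrated with a small in-memory sketch. Here each node stores the IDs of the nodes it was derived from, and replaying a decision is a depth-first walk from the outcome back to its causes. The `ContextGraph` class, its methods, and the node kinds are hypothetical and chosen only to mirror the four parts of a decision trace; a production graph would live in a durable graph store.

```python
from typing import Dict, List, Sequence, Set


class ContextGraph:
    """Hypothetical minimal context graph: each node records what it was derived from."""

    def __init__(self) -> None:
        self.nodes: Dict[str, dict] = {}
        self.parents: Dict[str, List[str]] = {}  # node id -> ids of its direct causes

    def add_node(self, node_id: str, kind: str, detail: str,
                 derived_from: Sequence[str] = ()) -> None:
        self.nodes[node_id] = {"kind": kind, "detail": detail}
        self.parents[node_id] = list(derived_from)

    def replay(self, outcome_id: str) -> List[dict]:
        """Return an outcome and all of its ancestors, causes before effects."""
        ordered: List[str] = []
        seen: Set[str] = set()

        def visit(node_id: str) -> None:
            if node_id in seen:
                return
            seen.add(node_id)
            for parent in self.parents.get(node_id, []):
                visit(parent)
            ordered.append(node_id)  # appended only after all of its causes

        visit(outcome_id)
        return [{"id": n, **self.nodes[n]} for n in ordered]


graph = ContextGraph()
graph.add_node("in-1", "input", "CRM record: Acme flagged as strategic partner")
graph.add_node("pol-1", "policy", "pricing-policy-v3: 20% discount cap")
graph.add_node("rsn-1", "reasoning", "Grant one-time exception above the cap",
               derived_from=["in-1", "pol-1"])
graph.add_node("out-1", "outcome", "Approved 25% discount", derived_from=["rsn-1"])

for step in graph.replay("out-1"):
    print(f'{step["kind"]:>9}: {step["detail"]}')
```

The post-order walk guarantees every input and policy appears before the reasoning that used it, so the printed replay reads in the order the decision was actually assembled.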

The Context Graph: An AI "Flight Recorder" - Addressing challenges effectively

The MoolAI Gateway Advantage: Turning Exhaust into Intelligence

The challenge, historically, has been implementation.

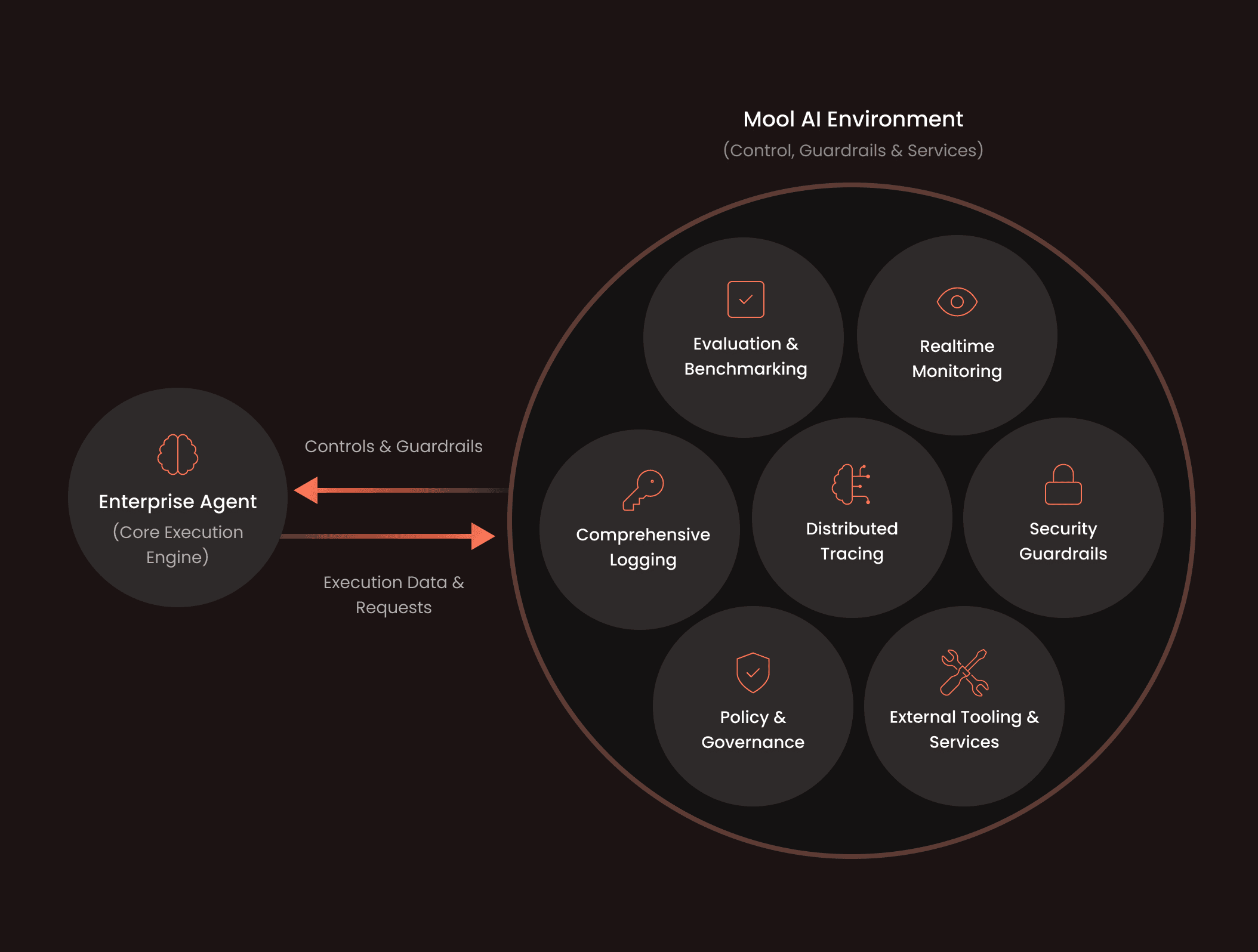

Building a Context Graph is usually hard work: it requires rewriting applications to log events. MoolAI avoids this by leveraging its architecture as an AI Gateway. Because MoolAI sits in the request path, between the user and the model, it sees every interaction.

While competitors force you to manually feed data into their vector stores, MoolAI’s unique position allows us to passively construct this graph from the "exhaust" of daily operations. We observe the prompt, the retrieved context, the PII masking events, and the model's output in real-time. We stitch these disparate signals into a coherent "Decision Graph" without requiring you to refactor your entire application stack.
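Stitching gateway "exhaust" into decision traces can be sketched as grouping an interleaved event stream by a correlation ID and ordering each group by time. This is an illustrative assumption, not MoolAI's implementation: the `GatewayEvent` type, the `request_id` field, the event kinds, and the sample payloads are hypothetical, and treating time order as the causal chain is a deliberate simplification.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class GatewayEvent:
    request_id: str   # correlation ID attached to each call (assumed)
    timestamp: float  # when the gateway observed the event
    kind: str         # "prompt", "pii_mask", "retrieval", "completion", ...
    payload: str


def stitch(events: List[GatewayEvent]) -> Dict[str, List[GatewayEvent]]:
    """Group an interleaved event stream into per-request, time-ordered traces."""
    traces: Dict[str, List[GatewayEvent]] = defaultdict(list)
    for event in events:
        traces[event.request_id].append(event)
    for trace in traces.values():
        trace.sort(key=lambda e: e.timestamp)
    return dict(traces)


def to_edges(trace: List[GatewayEvent]) -> List[Tuple[str, str]]:
    """Link consecutive events; uses time order as the causal chain (a simplification)."""
    return [(a.kind, b.kind) for a, b in zip(trace, trace[1:])]


# Illustrative events from two concurrent requests, arriving out of order
stream = [
    GatewayEvent("req-2", 3.0, "prompt", "What is our refund policy?"),
    GatewayEvent("req-1", 1.0, "prompt", "Email jane@example.com the Q3 quote"),
    GatewayEvent("req-1", 1.2, "retrieval", "quote-template-v2"),
    GatewayEvent("req-1", 1.1, "pii_mask", "jane@example.com -> [EMAIL_1]"),
    GatewayEvent("req-2", 3.4, "completion", "Summary of the refund policy"),
    GatewayEvent("req-1", 1.9, "completion", "Draft quote addressed to [EMAIL_1]"),
]

traces = stitch(stream)
print(to_edges(traces["req-1"]))
# [('prompt', 'pii_mask'), ('pii_mask', 'retrieval'), ('retrieval', 'completion')]
```

Because the gateway already sees each of these signals, no application code has to change for the traces to exist; the application only needs its calls to pass through the gateway.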

Security Is Not Just a Filter; It’s Provenance

This brings us back to the core differentiator: architecting a security system that ensures privacy and prevents data leaks.

Most "wrapper" solutions rely on downstream filters or reactive monitoring. They are trying to catch a leak after the model has already generated it. MoolAI’s "Glass Box" approach is different. By maintaining a Context Graph within your secure perimeter, we provide:

Deterministic Guardrails: The agent doesn't just "try" to be safe; it checks the Context Graph for approved precedents before acting.

Auditability: In regulated industries, "the model hallucinated" is not a valid legal defense. "Here is the decision trace linking the output to this specific policy document" is.

Data Sovereignty: Your "Decision Graph" contains your company’s most sensitive trade secrets, the logic of how you do business. Because MoolAI executes within your environment, this graph never leaks to a public cloud provider.
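The "Deterministic Guardrails" idea above can be sketched as a pre-action check that consults recorded precedents before the agent acts. The `Precedent` and `Verdict` types, the `check_action` function, and the `reusable` flag are hypothetical; the flag is included to capture the earlier point that a one-time exception should not become a precedent the agent repeats.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Precedent:
    trace_id: str     # decision trace in which the exception was granted
    action: str       # e.g. "discount"
    customer: str
    max_value: float  # largest value that exception approved
    reusable: bool    # False for one-time exceptions


@dataclass(frozen=True)
class Verdict:
    allowed: bool
    reason: str
    precedent_id: Optional[str] = None


def check_action(action: str, customer: str, value: float,
                 policy_limit: float, precedents: List[Precedent]) -> Verdict:
    """Deterministic pre-action check: identical inputs always yield the same verdict."""
    if value <= policy_limit:
        return Verdict(True, "within policy limit")
    for p in precedents:
        if (p.action == action and p.customer == customer
                and p.reusable and value <= p.max_value):
            return Verdict(True, "covered by approved precedent", p.trace_id)
    # Nothing covers the action: block and hand off instead of improvising
    return Verdict(False, "no policy or reusable precedent; escalate to a human")


precedents = [
    Precedent("trace-0042", "discount", "acme", 25.0, reusable=True),
    Precedent("trace-0107", "discount", "globex", 30.0, reusable=False),
]

print(check_action("discount", "acme", 22.0, 20.0, precedents))
print(check_action("discount", "globex", 28.0, 20.0, precedents))
```

Every allowed verdict either cites the policy limit or carries the `trace_id` of the precedent that justified it, which is the kind of link an auditor would follow back through the decision trace.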

Conclusion

Security isn't just about stopping bad prompts. It's about proving why good decisions were made.

As we entrust AI with more autonomy, the ability to trace a decision back to its root cause becomes the defining requirement for enterprise adoption. Don't settle for a black box that works "most of the time." Build a Glass Box that works transparently, every time.

MoolAI continually updates its enterprise AI solutions with the latest technology to eliminate bottlenecks in existing deployments.

Reach out to us to learn more.