Jan 13, 2026

Table of contents

Why should there be limitations in the level of automation while using LLMs in the Health Care Industry?

LLMs inform and not instruct – The MoolAI way

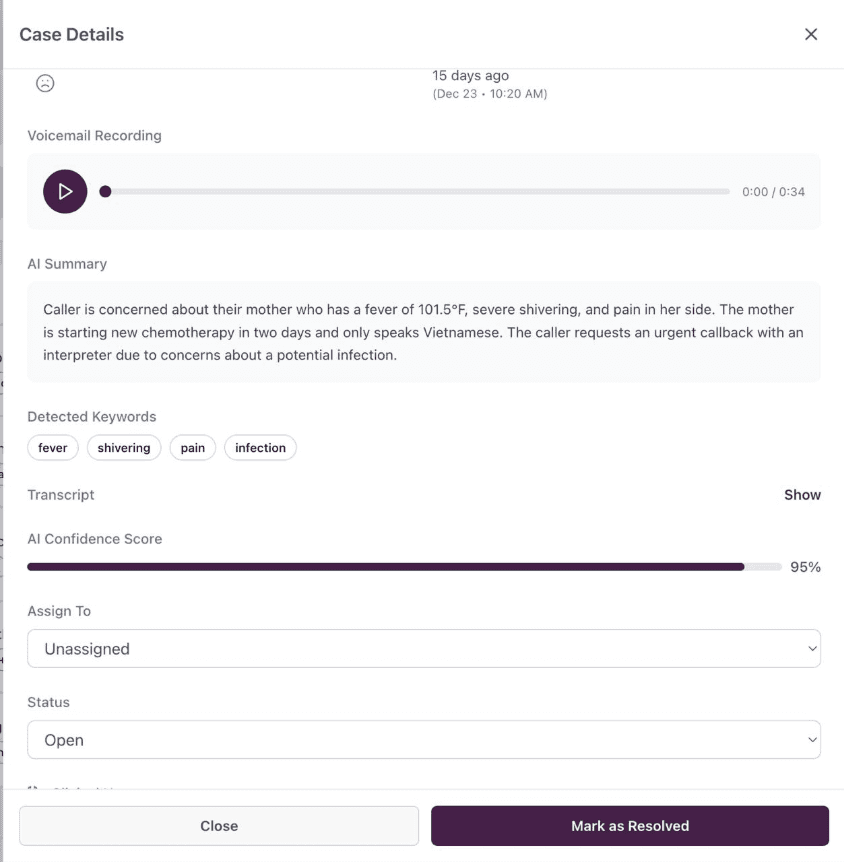

Above: MoolAI’s Case Details view

Unlike most other industries, healthcare is a domain where end-to-end clinical processes directly affect human life. Decisions such as assigning physicians and recommending diagnostic procedures must never be compromised, even slightly, because errors can have severe consequences and erode patient trust.

Consider a scenario in which a patient leaves a voicemail saying he has chest congestion, which he assumes is due to acidity. Left to itself, an LLM could categorize this as a minor health issue and assign a general practitioner. When the patient is examined, however, it turns out he has had a mild heart attack, and by the time a specialist is assigned, his condition has become critical. This is a clear case where the system failed to call for human intervention at the right stage.

In healthcare, LLMs must be architected to assist humans, not replace them.

This article explores the gaps and hidden complexities that engineers, clinical informaticists, and healthcare leaders must understand before deploying LLMs in clinical settings.

Why should there be limitations in the level of automation while using LLMs in the Health Care Industry?

Fully autonomous, end-to-end automation is not an option in healthcare. Here are a few reasons:

Irreversible losses:

In industries such as finance or retail, inaccurate LLM responses may cause lost revenue, unreliable data, or customer dissatisfaction, all of which can eventually be remedied. In healthcare, even a minor deviation in interpreting a patient’s symptoms can lead to inappropriate treatment and a longer recovery, and that harm may not be reversible. When no human owns the decision, the resulting allegations and scrutiny can lead to a lasting loss of trust.

Data Interpretation:

Enterprise data in most industries can be interpreted in a fairly straightforward, systematic way. Healthcare data, by contrast, is context-heavy: it takes human judgment to correlate a patient’s history, symptoms, and side effects and arrive at the best course of treatment. LLMs can assist, but they cannot decide.

Unsafe Hallucinations:

LLM reasoning is probabilistic and hedged with “ifs” and “buts”, so it can return partial or inaccurate information. In other industries this may be of little consequence; in healthcare, data must be as accurate as possible and aligned with clinical protocols.

Compliance and Auditability:

Every decision must be explainable during compliance audits. Fully automated LLM decisions may not hold up to audit checks, which complicates compliance clearance and adherence to HIPAA. Organizations should also ensure that all Personally Identifiable Information (PII) is protected by encryption at rest and that the LLM only ever sees a masked version, in line with HIPAA’s safeguards for protected health information.
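As a rough illustration of the masking step, here is a minimal Python sketch that assumes a simple regex-based approach. The pattern set, the token format, and the names `mask_pii` and `unmask` are hypothetical; they cover only a few identifier types, whereas a production system would need a dedicated de-identification component with much broader coverage. The token-to-value mapping is returned in memory here for simplicity; in practice it would sit in the encrypted store, out of the LLM’s reach.

```python
import re

# Hypothetical, deliberately small pattern set (illustration only).
# Order matters: SSNs are masked before the looser phone pattern runs.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace matched identifiers with placeholder tokens.

    Returns the masked text (the only version the LLM should see) and a
    token -> original mapping, which a real system would keep encrypted.
    """
    mapping: dict[str, str] = {}
    masked = text
    for label, pattern in PII_PATTERNS.items():
        count = 0

        def _replace(match: re.Match) -> str:
            nonlocal count
            count += 1
            token = f"[{label}_{count}]"
            mapping[token] = match.group(0)
            return token

        masked = pattern.sub(_replace, masked)
    return masked, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values, e.g. when displaying results to authorized staff."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


note = "Patient called from 555-123-4567, email jdoe@example.com, reports chest congestion."
masked, mapping = mask_pii(note)
print(masked)  # Patient called from [PHONE_1], email [EMAIL_1], reports chest congestion.
```

Only `masked` would be sent to the LLM; `unmask` is meant for rendering results back to authorized staff.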

When it comes to reasoning, no amount of automation can match human intelligence and interpretation, so critical workflows in healthcare should never bypass human checks. MoolAI’s Healthcare Enterprise AI solution is built to strike a careful balance between LLM assistance and manual intervention.

LLMs inform and not instruct – The MoolAI way

At MoolAI, LLMs surface the decision points where humans intervene; they are not the decision makers. At certain workflow points, when the situation demands it, the LLM can be bypassed entirely and the case sent straight to a human.
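One way to express this separation in code is to give the LLM and the human different output types. The sketch below uses hypothetical names (`Suggestion`, `Assignment`, `triage`, `assign`, `BYPASS_CATEGORIES`) that are illustrative only, not MoolAI’s actual implementation, and stubs the LLM call as a plain callable.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical case categories that skip LLM triage and go straight to a human.
BYPASS_CATEGORIES = {"caller_flagged_emergency", "repeat_contact_24h"}


@dataclass(frozen=True)
class Suggestion:
    """The only thing the LLM may produce: a recommendation, never a decision."""
    specialty: str
    rationale: str


@dataclass(frozen=True)
class Assignment:
    """A binding decision, which exists only once a named human signs off."""
    specialty: str
    reviewer_id: str
    llm_suggestion: Optional[Suggestion]


def triage(category: str, llm_suggest: Callable[[], Suggestion]) -> Optional[Suggestion]:
    """Ask the LLM for a suggestion unless this category bypasses it."""
    if category in BYPASS_CATEGORIES:
        return None  # bypass: a human triages the case from scratch
    return llm_suggest()


def assign(reviewer_id: str, specialty: str, suggestion: Optional[Suggestion]) -> Assignment:
    """Record the human's decision alongside whatever the LLM suggested, for audit."""
    if not reviewer_id:
        raise ValueError("An assignment requires a named human reviewer")
    return Assignment(specialty, reviewer_id, suggestion)


# Usage: the reviewer overrides the LLM's routine routing.
stub_llm = lambda: Suggestion("general_practice", "Symptoms consistent with acidity")
suggestion = triage("voicemail", stub_llm)
decision = assign("nurse_042", "cardiology", suggestion)
```

Because the LLM’s suggestion and the human’s decision are separate types, the override in the last line (cardiology instead of general practice, as in the chest-congestion scenario) is recorded for audit rather than silently lost.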

Guardrails Embedded as Product Logic

In MoolAI, guardrails are not an add-on; they are embedded in the platform at the foundation level. Validation layers constrain LLM outputs to predefined clinical and operational boundaries, keeping responses safe, predictable, and auditable.
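A validation layer of this kind might look like the following sketch, which assumes the LLM is asked to return a JSON object with exactly three fields. The field names, the allowed values, and `GuardrailViolation` are hypothetical placeholders for whatever clinical and operational policy actually defines.

```python
import json

# Hypothetical boundaries; real ones would come from clinical and operational policy.
REQUIRED_FIELDS = {"urgency", "specialty", "summary"}
ALLOWED_URGENCY = {"routine", "urgent", "emergency"}
ALLOWED_SPECIALTIES = {"general_practice", "cardiology", "pulmonology", "gastroenterology"}


class GuardrailViolation(Exception):
    """Raised when LLM output falls outside the permitted boundaries."""


def validate_llm_output(raw: str) -> dict:
    """Parse and check LLM output; anything out of bounds is rejected, not repaired."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise GuardrailViolation(f"not valid JSON: {exc.msg}") from exc
    if not isinstance(data, dict):
        raise GuardrailViolation("output must be a JSON object")

    keys = set(data)
    if keys != REQUIRED_FIELDS:
        raise GuardrailViolation(
            f"missing {sorted(REQUIRED_FIELDS - keys)}, "
            f"unexpected {sorted(keys - REQUIRED_FIELDS)}"
        )

    # Enumerated fields must be strings drawn from a fixed allow-list.
    for name, allowed in (("urgency", ALLOWED_URGENCY), ("specialty", ALLOWED_SPECIALTIES)):
        value = data[name]
        if not isinstance(value, str) or value not in allowed:
            raise GuardrailViolation(f"{name} {value!r} is not permitted")

    if not isinstance(data["summary"], str) or not data["summary"].strip():
        raise GuardrailViolation("summary must be a non-empty string")
    return data
```

Out-of-bounds output is rejected rather than patched up; in a design like this, a rejected case would typically fall back to human triage.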

Open-ended prompts are replaced with structured decision flows that mirror real clinical protocols. This enables:

Repeatable and reproducible outcomes

Precise compliance audits

Adherence to established healthcare SOPs
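A structured decision flow can be approximated as a fixed, ordered list of narrow steps whose outputs are all logged. The sketch below is a hypothetical three-step protocol (`check_intake`, `classify_urgency`, `queue_for_review`) that uses simple rules where a real flow might ask the LLM a narrow, structured question; the step names, symptom list, and escalation rule are assumptions for illustration, not clinical guidance.

```python
from dataclasses import dataclass, field
from typing import Callable

# Each step reads the case state and returns only the fields it is allowed to set.
StepFn = Callable[[dict], dict]


@dataclass(frozen=True)
class Step:
    name: str
    run: StepFn


@dataclass
class FlowResult:
    state: dict
    audit_log: list = field(default_factory=list)


def run_flow(steps: list[Step], case: dict) -> FlowResult:
    """Run fixed protocol steps in order, logging every step's output for audit."""
    result = FlowResult(dict(case))  # copy so the caller's case is never mutated
    for step in steps:
        output = step.run(result.state)
        result.audit_log.append({"step": step.name, "output": dict(output)})
        result.state.update(output)
    return result


# Hypothetical protocol data (illustration only).
REQUIRED_INTAKE = ("symptoms", "onset")
ESCALATE_SYMPTOMS = {"chest congestion", "chest pain", "shortness of breath"}


def check_intake(state: dict) -> dict:
    return {"missing_intake": [f for f in REQUIRED_INTAKE if not state.get(f)]}


def classify_urgency(state: dict) -> dict:
    hits = sorted(ESCALATE_SYMPTOMS & set(state.get("symptoms", [])))
    # Incomplete intake is escalated rather than guessed at.
    urgent = bool(hits) or bool(state["missing_intake"])
    return {"escalation_reasons": hits, "urgency": "urgent" if urgent else "routine"}


def queue_for_review(state: dict) -> dict:
    return {"status": "pending_human_review"}  # the flow never closes a case itself


PROTOCOL = [
    Step("check_intake", check_intake),
    Step("classify_urgency", classify_urgency),
    Step("queue_for_review", queue_for_review),
]

case = {"symptoms": ["chest congestion"], "onset": "2 hours ago"}
result = run_flow(PROTOCOL, case)
```

Because the steps and their order are fixed, the same input yields the same audit log on every run, which is what makes outcomes reproducible and auditable.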

The result is an AI system that behaves less like an autonomous decision maker and more like a governed clinical assistant.

Privacy, Compliance, and Trust

Keeping intelligence separate from identity allows organizations to scale AI adoption without a corresponding increase in security risk.

From Intelligence to Action, Safely

Healthcare AI succeeds only when insights translate into timely, safe action. By combining AI summarization, symptom detection, SLA tracking, and confidence scoring in a single governed interface, MoolAI enables healthcare teams to prioritize effectively without surrendering control.
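To make “prioritize effectively” concrete, the sketch below orders a review queue by combining a red-flag indicator, SLA time remaining, and an AI confidence score. The ordering (red flags first, then tightest SLA, then lowest confidence) and the `QueuedCase` fields are assumptions chosen for illustration, not MoolAI’s actual ranking logic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class QueuedCase:
    case_id: str
    sla_deadline: datetime
    ai_confidence: float  # hypothetical score in the range 0.0-1.0
    red_flag: bool        # any red-flag symptom detected


def priority_key(case: QueuedCase, now: datetime) -> tuple:
    """Lower tuples sort first: red flags, then tightest SLA, then least AI certainty."""
    minutes_left = (case.sla_deadline - now).total_seconds() / 60
    return (not case.red_flag, minutes_left, case.ai_confidence)


def work_queue(cases: list[QueuedCase], now: datetime) -> list[QueuedCase]:
    """Order cases for human review; nothing here assigns or resolves a case."""
    return sorted(cases, key=lambda c: priority_key(c, now))


now = datetime(2026, 1, 13, 9, 0)
cases = [
    QueuedCase("A", now + timedelta(minutes=120), 0.90, red_flag=False),
    QueuedCase("B", now + timedelta(minutes=240), 0.60, red_flag=True),
    QueuedCase("C", now + timedelta(minutes=30), 0.95, red_flag=False),
]
print([c.case_id for c in work_queue(cases, now)])  # ['B', 'C', 'A']
```

The function only orders the queue; picking up, assigning, and resolving cases remain human actions.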

The Case Details screenshot at the top of this article shows the AI-generated clinical summary, critical-symptom detection, confidence scoring, and human-controlled assignment and resolution.

AI Summary converts raw voicemail or text into a clinically relevant write-up.

Detected Keywords highlight potential red-flag symptoms without over-interpreting intent, ensuring reliability.

AI Confidence Score quantifies certainty, enabling informed escalation decisions.

Assignment and Status Controls ensure that every case is resolved only after human review.
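The last two controls, confidence-based escalation and human-gated status changes, can be sketched as follows. The 0.80 threshold, the status names, the transition rules, and the `CaseRecord` fields are hypothetical; a real threshold would need clinical validation.

```python
from dataclasses import dataclass
from typing import Optional

ESCALATION_THRESHOLD = 0.80  # hypothetical cut-off; would need clinical validation

# Only these status changes are permitted, and each requires a named human.
ALLOWED_TRANSITIONS = {("open", "in_review"), ("in_review", "resolved")}


@dataclass
class CaseRecord:
    case_id: str
    ai_summary: str
    detected_keywords: list[str]
    ai_confidence: float
    status: str = "open"
    assignee: Optional[str] = None


def needs_escalation(case: CaseRecord) -> bool:
    """Flag low-confidence or red-flag cases for senior clinical review."""
    return case.ai_confidence < ESCALATION_THRESHOLD or bool(case.detected_keywords)


def change_status(case: CaseRecord, new_status: str, actor: str) -> None:
    """Apply a status change only along permitted transitions, and only by a human."""
    if (case.status, new_status) not in ALLOWED_TRANSITIONS:
        raise ValueError(f"cannot move {case.case_id} from {case.status} to {new_status}")
    if not actor:
        raise PermissionError("status changes require a named human")
    if new_status == "in_review":
        case.assignee = actor  # whoever picks the case up owns it
    elif actor != case.assignee:
        raise PermissionError("only the assigned reviewer can resolve this case")
    case.status = new_status


case = CaseRecord("C-101", "Chest congestion; patient suspects acidity.", ["chest congestion"], 0.65)
print(needs_escalation(case))  # True
change_status(case, "in_review", "nurse_042")
```

The transition table makes it impossible to jump a case from open straight to resolved, so every resolution passes through a named reviewer.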
