Dec 21, 2025

Table of contents

The Hidden Risk

Why Current Approaches Fail ?

The MoolAI differentiator

The Technical Advantage

The Redaction Engine

Wrapping Up

A major threat anticipated with AI adoption is that critical data and sensitive personal information will be compromised, causing data leaks and ultimately resulting in loss of trust. Check if your vendor has robust data protection protocols eliminating any room for vulnerabilities.

In this article, you can understand potential risks involved due to exposed data, subsequent revenue losses, and how MoolAI stands out by prioritizing and preserving data privacy.

The Hidden Risk

Enterprises are currently rushing to integrate Large Language Models (LLMs) into their core operations, driven by the promise of automation and "agentic" capabilities. However, this speed often obscures an existential security gap. By connecting LLMs to internal data and external tools, organizations expose themselves to prompt injection, data leakage, and uncontrolled agent behaviour.

The reality is that most "security layers" currently on the market are reactive, shallow, or bolted on after the fact. They treat AI security as a content moderation problem rather than an architectural one. As highlighted by OWASP ranking prompt injection as the #1 LLM vulnerability, this is not a minor bug - it is a critical failure point that can turn an AI assistant into an internal threat vector.

Why Current Approaches Fail ?

The failure of common solutions—such as regex filters, keyword blockers, and API wrappers, stems from the fundamental architecture of Generative AI. LLMs operate on "in-band signaling," where system instructions (code) and user inputs (data) are flattened into a single stream of natural language.

Because the model lacks a rigorous mechanism to distinguish between the "control plane" and the "data plane," it suffers from the Confused Deputy problem. An attacker can disguise malicious commands as data, bypassing reactive filters entirely. Relying on the LLM to police itself or trusting raw user input is a structural flaw, not a configuration issue. As research indicates, even state-of-the-art models remain highly vulnerable to indirect injection and jailbreaks.

MoolAI addresses this by operating as a dedicated AI Gateway Platform that enforces security upstream, before any interaction with the LLM occurs. Rather than relying on the model’s probabilistic alignment, MoolAI functions as a deterministic secure AI firewall.

Our architecture intercepts, validates, and neutralizes malicious or manipulative content before it reaches the inference layer. This is a first-principles design, built to solve the "in-band" signaling problem by imposing a strict separation of duties. We do not simply filter bad words; we structurally prevent the model from executing unauthorized logic.

The MoolAI differentiator

The Technical Advantage

MoolAI’s defense is built on capabilities that are difficult to replicate without rebuilding the security stack from scratch:

Universal Model Agnosticism:

MoolAI abstracts the complexity of the AI landscape. You gain a single, unified interface to access any major LLM provider (OpenAI, Anthropic), open-source models, or our own specialized foundation models. This allows enterprises to switch models instantly to optimize for cost or performance without rewriting a single line of application code—a massive business advantage in a volatile market.

Deep Observability & Forensics:

Security requires visibility. MoolAI provides granular, real-time audit logs for every interaction flowing through the gateway. This allows security teams to trace prompt injection attempts, PII leakage risks, and usage anomalies down to the individual user level, transforming a "black box" AI system into a fully auditable asset.

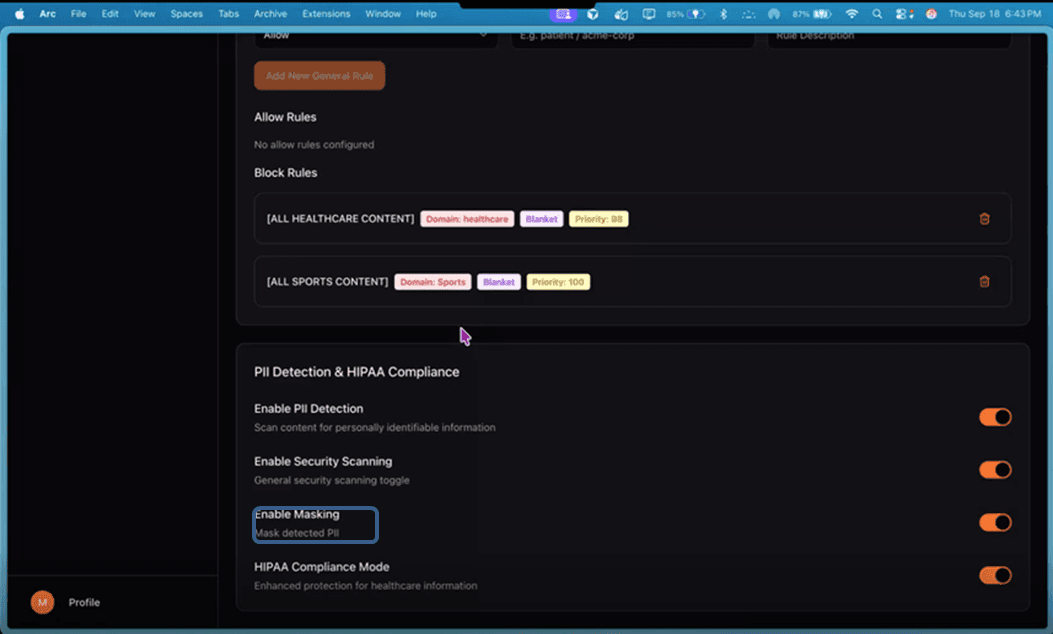

Complete PII/PHI Masking: We integrate a high-fidelity redaction engine directly into the gateway. MoolAI is able to completely mask any PII (Personally Identifiable Information) and PHI (Protected Health Information) data in real-time, ensuring that sensitive identifiers never leave your secure perimeter or reach external model providers.

Client-Environment Execution: The entire MoolAI architecture is capable of running entirely inside the client’s environment. This negates the latency and exposure risks of cloud-proxy solutions

The Redaction Engine

To understand the architectural difference, consider a common scenario where an employee accidentally attempts to process sensitive customer data.

The Scenario:

A customer support agent pastes a raw chat log into an AI assistant to generate a summary. The log contains a client's private email and phone number.

Without MoolAI (Direct API):

Input: "Summarize this complaint from john@moolai.ai at 555-0123."

Result: The raw PII is sent directly to the public model provider's servers. The data is now exposed to third-party logging and potential training sets, constituting an immediate compliance breach.

With MoolAI (Gateway Architecture):

Input: "Summarize this complaint from john@moolai.ai at 555-0123."

Gateway Action: The MoolAI engine intercepts the payload before it leaves the perimeter. It detects the sensitive entities and applies deterministic masking.

Image: Sanitized output to LLM

Sanitized Output to LLM: "Summarize this complaint from <EMAIL_MASKED> at <PHONE_MASKED>."

Result: The AI generates the summary using the context, but the sensitive identities never left the secure environment. The breach is structurally impossible.

Zero Dependency

The strategic advantage of running fully within the client’s infrastructure cannot be overstated. For regulated industries and mission-critical environments, data sovereignty is non-negotiable.

MoolAI guarantees compliance with strict regulatory frameworks, including SOC 2 Type II and HIPAA.

By ensuring that sensitive data never leaves your controlled perimeter, you are not just renting security; you are owning a secure infrastructure that withstands regulatory scrutiny.

Wrapping Up

As AI systems transition from passive chatbots to autonomous agents capable of executing code and transactions, the stakes for security failures will rise exponentially. Shallow defenses will collapse under the weight of complex, multi-step agentic attacks.

MoolAI is not built for short-term demos. It is an architecture engineered for the next decade of autonomous computing. We provide the structural integrity required to deploy high-stakes AI with absolute confidence.

Don’t negotiate on security and compliance. MoolAI will be your trusted long-term AI partner where your data is hosted and secured in your own environment.